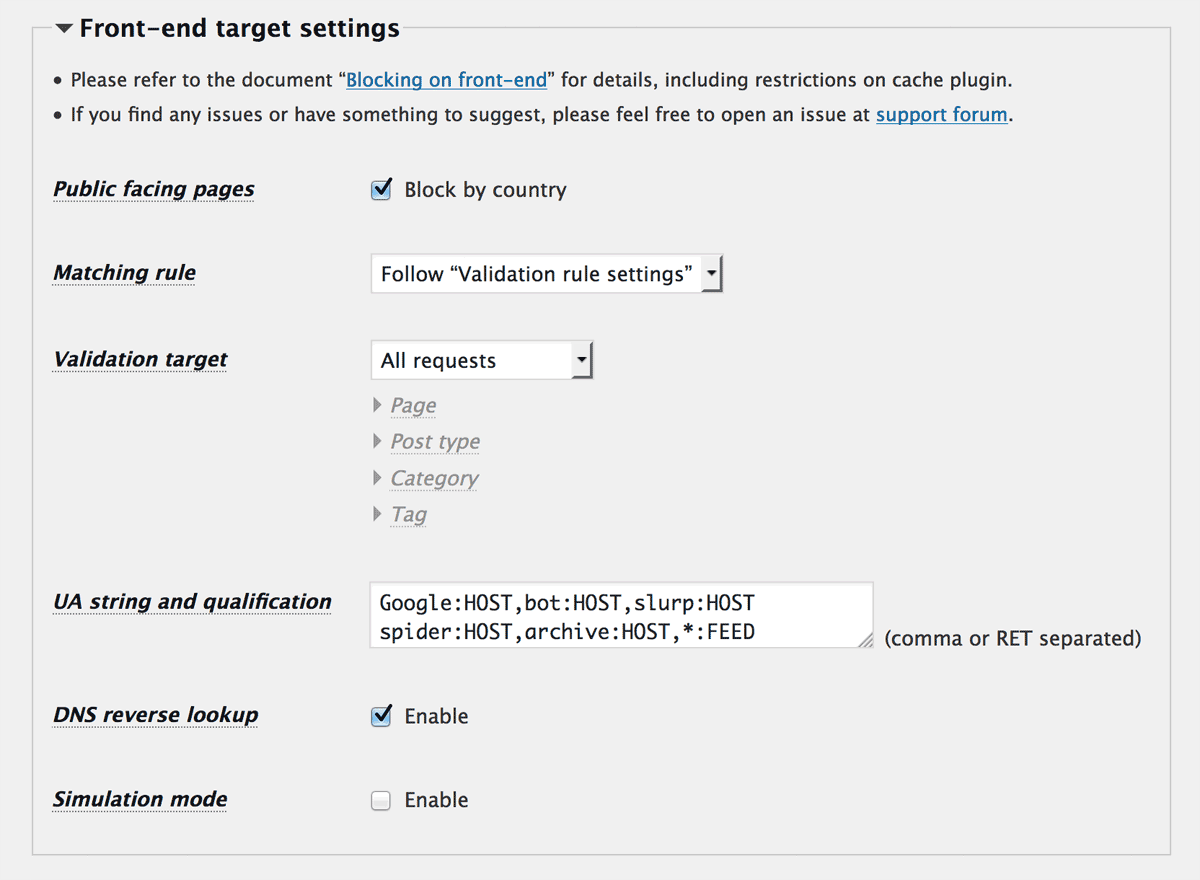

In this section you can set up rules to block access to the public-facing pages (the front-end) from unwanted countries.

Against spammers, this plugin can reduce both the server load and the amount of comment spam by preventing them from fetching the comment form on the front-end. Against attacks that target vulnerabilities in themes and plugins, it can also reduce the risk of the site being compromised, for example by malware installation.

In general, it is difficult to pick out only the malicious requests from all requests unless you restrict content by region. Combined with the rules in “Validation rule settings”, however, blocking on the front-end can considerably reduce unnecessary traffic and risk for your site.

Public facing pages

Turn on “Block by country” when you do not want traffic from specific countries. Even when this option is enabled, “Whitelist/Blacklist of extra IP addresses prior to country code”, “Bad signatures in query” and “Prevent malicious file uploading” in the “Validation rule settings” section remain in effect.

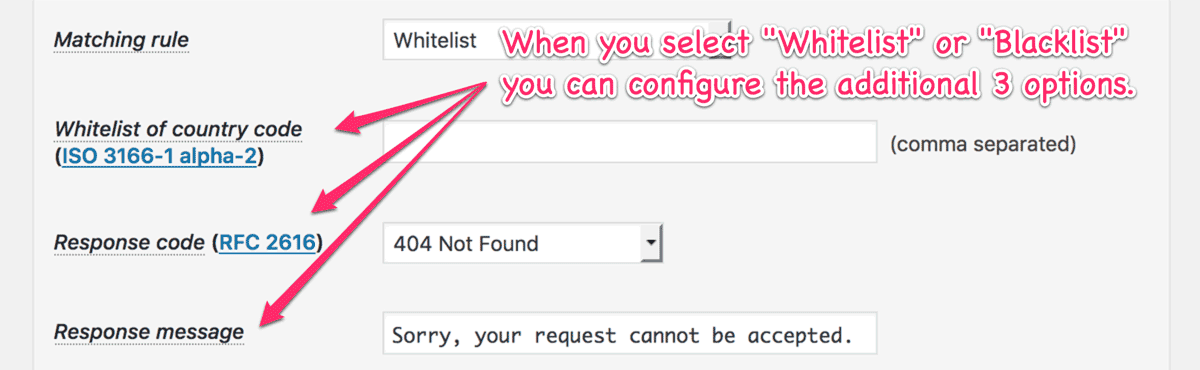

Matching rule

You can select one of these:

- Follow “Validation rule settings”

- Whitelist

- Blacklist

When you select Whitelist or Blacklist, you can configure a set of country codes and a response code that differ from those in the “Validation rule settings” section.

If blocking by country is inappropriate for your site or if you want to block only specific bots and crawlers, you can leave “Whitelist of country code” empty to apply only a set of rules under “UA string and qualification”.
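The matching rule can be pictured with a minimal sketch. The function name, the rule labels and the treatment of an empty list below are assumptions for illustration, not the plugin's actual code:

```python
def is_blocked_by_country(country, rule, codes):
    """Decide whether a request from `country` is blocked by the country rule.

    country -- two-letter country code of the requester, e.g. "FR"
    rule    -- "whitelist" or "blacklist" (hypothetical labels)
    codes   -- set of country codes configured for that rule
    """
    if not codes:
        # Assumption based on the text: an empty list means no blocking
        # by country, so only the UA rules would apply.
        return False
    if rule == "whitelist":
        # Only listed countries are allowed.
        return country not in codes
    if rule == "blacklist":
        # Only listed countries are blocked.
        return country in codes
    raise ValueError(f"unknown rule: {rule!r}")


print(is_blocked_by_country("FR", "whitelist", {"JP", "US"}))
print(is_blocked_by_country("FR", "blacklist", {"JP", "US"}))
print(is_blocked_by_country("FR", "whitelist", set()))
```

With a whitelist of JP and US, a request from FR is blocked; with the same codes as a blacklist it is allowed; with an empty whitelist no country is blocked.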

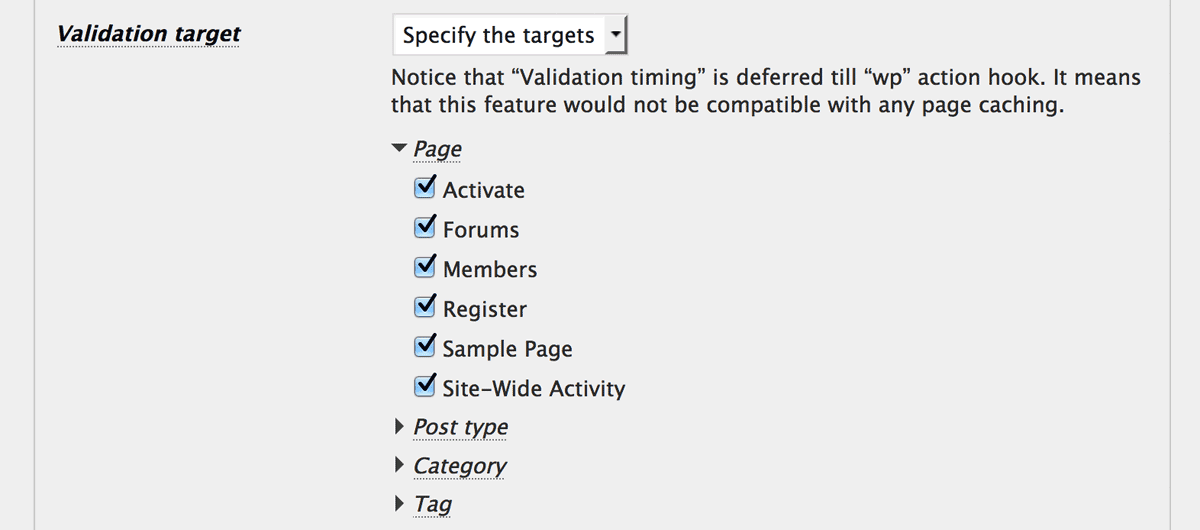

Validation target

You can select one of the following:

- All requests

Every request to the front-end is validated as a blocking target. This can be compatible with some caching plugins under certain conditions.

- Specify the targets

You can specify requests for pages, post types, categories and tags, on a single page or an archive page, as blocking targets. This ignores the “Validation timing” setting, because that information has to be obtained from the requested URL. That means validation is always deferred until the `wp` action hook fires, and compatibility with page caching is lost.

Note: Even if you specify all the targets here, an attacker can still access the TOP page because it is neither a single page nor an archive page. Therefore, when you intend to validate all requests, you should select “All requests”.

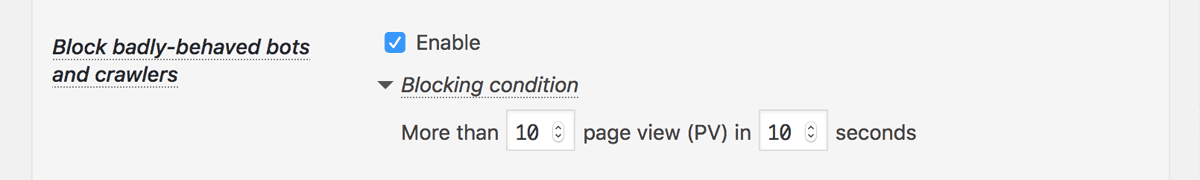

Block badly-behaved bots and crawlers

Block badly-behaved bots and crawlers that send many requests in a short time. Choose the observation period and the number of page requests generously enough that impatient human visitors are not affected.
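As a rough illustration of how an observation period and a request limit interact, here is a sliding-window counter. The class and parameter names are hypothetical, and the plugin's actual counting method is not described here, so treat this as a sketch under those assumptions:

```python
from collections import defaultdict, deque


class RequestCounter:
    """Count page requests per IP address within an observation period."""

    def __init__(self, period_seconds, max_requests):
        self.period = period_seconds
        self.max_requests = max_requests
        self._hits = defaultdict(deque)  # IP address -> request timestamps

    def is_badly_behaved(self, ip, now):
        """Record a request at time `now` (seconds) and report whether the
        IP has exceeded the allowed number of requests in the period."""
        hits = self._hits[ip]
        hits.append(now)
        # Drop timestamps that have fallen out of the observation period.
        while hits and now - hits[0] >= self.period:
            hits.popleft()
        return len(hits) > self.max_requests


counter = RequestCounter(period_seconds=60, max_requests=3)
for t in (0, 1, 2, 3):
    print(t, counter.is_badly_behaved("203.0.113.5", now=t))
```

A short period with a low limit catches aggressive crawlers quickly but also risks flagging a visitor who opens several pages in quick succession, which is why both values need some headroom.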

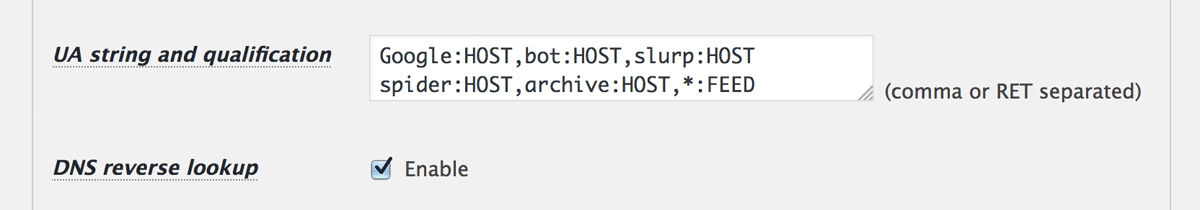

UA string and qualification

You can configure rules that qualify valuable bots and crawlers such as Google, Yahoo and Bing, or rules that block unwanted requests that cannot be blocked by country code. Each rule is a pair of “UA string” and “qualification” separated by a character that selects the behavior: “:” (pass) or “#” (block).

See “UA string and qualification” for more details.
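To make the rule format concrete, the following sketch splits a single rule into its three parts. The function name and the choice to split on the first separator are assumptions, and the plugin's real parser may behave differently:

```python
def parse_ua_rule(rule):
    """Split one rule into (ua_string, behavior, qualification).

    The separator ":" means pass and "#" means block. This sketch splits
    on the first separator it finds, which is an assumption.
    """
    for index, char in enumerate(rule):
        if char in ":#":
            behavior = "pass" if char == ":" else "block"
            return rule[:index].strip(), behavior, rule[index + 1:].strip()
    raise ValueError(f"no ':' or '#' separator in rule: {rule!r}")


print(parse_ua_rule("Google:HOST"))
print(parse_ua_rule("badbot#HOST"))  # "badbot" is a made-up UA string
```

The first rule would let a request whose user agent contains "Google" pass when the HOST qualification holds; the second would block a matching request under the same qualification.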

- Reverse DNS lookup

To make use of `HOST` in “qualification”, enable this option so that the host name corresponding to the IP address is looked up. If it is disabled, `HOST` and `HOST=…` are always deemed TRUE.
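The effect of this option can be sketched as follows. The sketch assumes that `HOST` is satisfied when a reverse lookup yields a host name for the IP address. That reading, along with the function names, is an assumption rather than the plugin's documented behavior:

```python
import socket


def reverse_lookup(ip):
    """Return the host name for `ip`, or `ip` itself when the lookup fails."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:
        return ip


def host_qualifies(ip, reverse_dns_enabled, lookup=reverse_lookup):
    """Evaluate the HOST qualification for a request from `ip`."""
    if not reverse_dns_enabled:
        # Per the text: with the option disabled, HOST is always TRUE.
        return True
    # Assumption: HOST holds when a host name (not the bare IP) is found.
    return lookup(ip) != ip


# A stub lookup keeps the example independent of real DNS.
def fake_lookup(ip):
    return "crawler.example.com"


print(host_qualifies("192.0.2.1", reverse_dns_enabled=True, lookup=fake_lookup))
print(host_qualifies("192.0.2.1", reverse_dns_enabled=False))
```

Because a disabled lookup makes every `HOST` check succeed, rules that rely on `HOST` to tell genuine crawlers from impostors stop discriminating until the option is turned on.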